As your website gets more popular, you may find that your server is getting more and more strained with the extra traffic load. There are many things you can do to take the strain off of an overworked web server, but this week, we’re going to look at load balancing.

The basic idea behind load balancing is that instead of running all your code on a single web server, you run it on several. Let’s say that we set up 3 web servers and deploy your code to each one. Any of these 3 servers can now handle an incoming request, but you need something to decide which server should receive each new request.

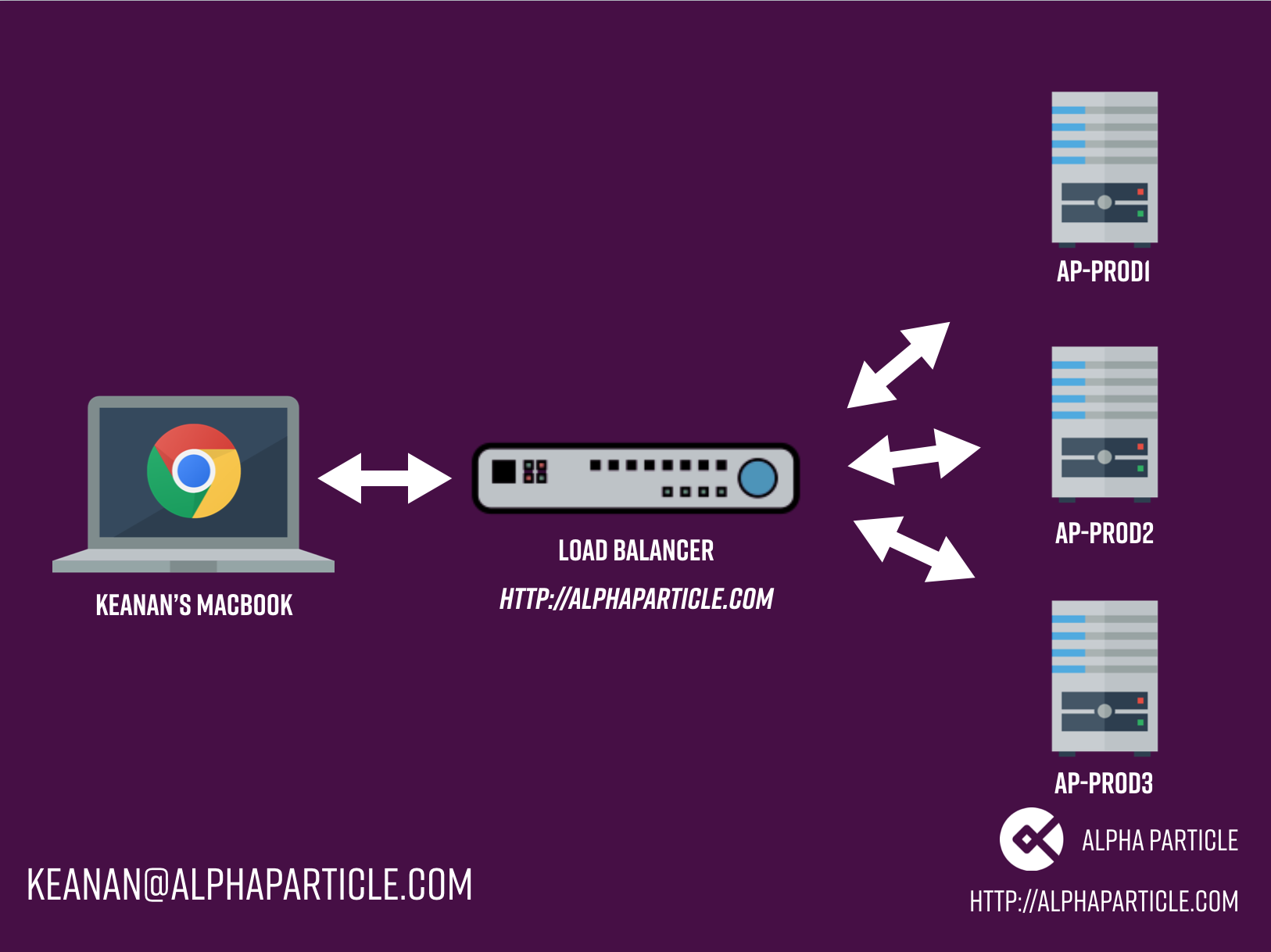

That’s where a load balancer comes in. A load balancer is what handles all the incoming traffic and decided which server should handle the request. It takes an incoming request and routes it to a specific server as well as handles returning the response to the user’s browser. For an example of how this works, take a look at the diagram below.
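To make that request path concrete, here is a minimal in-process sketch, assuming the backend servers can be modeled as plain Python functions (`make_backend` and `LoadBalancer` are hypothetical names for illustration, not any product’s API). The balancer is the single entry point: it picks a server, forwards the request, and hands the response back. For now it uses the simplest possible choice rule, a fixed rotation.

```python
import itertools
from typing import Callable

# Hypothetical stand-in for a backend web server: takes a request path and
# returns a response body. A real load balancer would forward HTTP instead.
Backend = Callable[[str], str]


def make_backend(name: str) -> Backend:
    """Build a fake web server that reports which server handled the request."""
    def handle(path: str) -> str:
        return f"{name} served {path}"
    return handle


class LoadBalancer:
    """Receives every request, picks a backend, forwards the request,
    and returns the backend's response to the caller (the browser)."""

    def __init__(self, backends: list[Backend]) -> None:
        # Simplest choice rule for this sketch: rotate through the backends.
        self._next_backend = itertools.cycle(backends)

    def handle(self, path: str) -> str:
        backend = next(self._next_backend)  # decide which server gets the request
        response = backend(path)            # forward the request to that server
        return response                     # hand the response back to the browser


balancer = LoadBalancer([make_backend(f"server-{i}") for i in range(1, 4)])
for path in ["/", "/about", "/cart", "/"]:
    print(balancer.handle(path))
```

Running the loop prints `server-1 served /`, `server-2 served /about`, `server-3 served /cart`, and then `server-1 served /` again: the browser only ever talks to the balancer, which spreads the work across all three servers.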

To handle all this traffic shuffling, a load balancer can use a variety of algorithms. Ultimately, choosing which algorithm to use depends on how your site functions, but the two most popular options are:

- Round Robin: Requests are handed to the servers in a fixed rotation. With 3 servers, the first request goes to server 1, the second to server 2, the third to server 3, and the fourth starts the cycle again at server 1.

- Least Connections: When a new request comes in, the load balancer checks which server has the fewest open connections and sends the new request to that server. This generally keeps the load more evenly spread, particularly when some requests take much longer to finish than others.
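Both algorithms fit in a few lines of code. The sketch below is illustrative only: it assumes the balancer tracks open connections itself through a hypothetical `release()` call made when a request finishes, and the server names are made up. Real load balancers implement these rules internally; you select one in their configuration.

```python
from itertools import cycle


class RoundRobin:
    """Hand each new request to the next server in a fixed rotation."""

    def __init__(self, servers: list[str]) -> None:
        self._rotation = cycle(servers)

    def pick(self) -> str:
        return next(self._rotation)


class LeastConnections:
    """Hand each new request to the server with the fewest open connections."""

    def __init__(self, servers: list[str]) -> None:
        # Open-connection count per server, tracked by the balancer itself.
        self.open_connections = {server: 0 for server in servers}

    def pick(self) -> str:
        # min() returns the first server with the lowest count,
        # so ties go to the server listed first.
        server = min(self.open_connections, key=self.open_connections.get)
        self.open_connections[server] += 1  # a new connection opens
        return server

    def release(self, server: str) -> None:
        """Hypothetical hook: call when a request finishes and its connection closes."""
        self.open_connections[server] -= 1


servers = ["server-1", "server-2", "server-3"]

rr = RoundRobin(servers)
print([rr.pick() for _ in range(5)])
# ['server-1', 'server-2', 'server-3', 'server-1', 'server-2']

lc = LeastConnections(servers)
first = lc.pick()   # server-1 (all servers tied at 0)
second = lc.pick()  # server-2
lc.release(first)   # server-1's request finishes early
print(lc.pick())    # server-1: it is back to 0 open connections
```

The last line shows the practical difference: Round Robin would have sent that request to `server-3` regardless of load, while Least Connections notices that `server-1` has already freed up.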

The algorithm can be configured on the load balancer itself. Whichever one you choose, keep monitoring your servers afterward to make sure none of them are becoming overloaded.

While load balancers are great, implementing a more complicated server architecture is not without its challenges. In particular, if the browser and the server need to exchange information specific to the user (for example, items in a shopping cart), then it’s important that the same server handles the user’s entire session, since that information may be stored only on the server that first received it. In this case, you can use a concept known as “sticky sessions” (also known as session persistence or session affinity). With sticky sessions enabled, the load balancer ensures that every request a user makes during a given session is passed to the same backend server.
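One way to picture sticky sessions is as a lookup table from session to server. The sketch below assumes each request carries a session ID (in practice often read from a cookie) and uses Round Robin only for a session’s first request; `StickyBalancer` and its session table are hypothetical illustrations, not how any particular provider implements session affinity.

```python
from itertools import cycle


class StickyBalancer:
    """Round Robin for new sessions; later requests reuse the assigned server."""

    def __init__(self, servers: list[str]) -> None:
        self._rotation = cycle(servers)
        # Hypothetical session table: session ID -> server it is pinned to.
        self._assignments: dict[str, str] = {}

    def pick(self, session_id: str) -> str:
        if session_id not in self._assignments:
            # First request in this session: choose a server the normal way...
            self._assignments[session_id] = next(self._rotation)
        # ...and every later request in the session goes to that same server.
        return self._assignments[session_id]


balancer = StickyBalancer(["server-1", "server-2", "server-3"])
print(balancer.pick("alice"))  # server-1
print(balancer.pick("bob"))    # server-2
print(balancer.pick("alice"))  # server-1 again, so Alice's cart stays on one server
```

A production setup would also need to expire old entries and decide what happens when a pinned server goes down; this sketch ignores both.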

To learn more about load balancers, you or your development team can read the documentation for your infrastructure provider using some of the links below:

With one of these solutions in place, your website can scale to handle more traffic: you add more servers, and the load balancer handles the routing behind the scenes.

Curious about whether a load balancer might work for your product? Use the contact form below to let us help you determine your infrastructure needs.